I am a senior staff software engineer at Google DeepMind working on infrastructure for large-scale training of machine learning models. Before joining Google DeepMind, I was a postdoc in the RISE lab at Berkeley, where I worked on building a platform for developing more predictable and reliable autonomous vehicles. My research interests lie in developing systems for self-driving cars and robots, distributed systems, operating systems, and large-scale data processing.

Before joining Berkeley, I spent my time redesigning the software stack for warehouse-scale data centres as part of the Systems Research Group at the University of Cambridge. In my research there, I designed and built systems that aimed to increase cluster utilization without affecting performance, as well as easy-to-use large-scale data processing solutions. For my research at Cambridge, I received a Google Fellowship in Distributed Systems and an NSDI Best Paper Award. Previously, I graduated with an MEng degree in Computing and Software Engineering from Imperial College London, where I was awarded the Microsoft Research Prize for an outstanding master's thesis.

In the past, I was fortunate to work on large-scale distributed systems in the cluster management team at Google, in the Data Infrastructure team at Facebook, and at Microsoft Research.

Firmament: fast, centralized cluster scheduling at scale. Berkeley RISELab Seminar 2017

Firmament: fast, centralized cluster scheduling at scale. OSDI 2016

Understanding cluster schedulers -- and why you'll want a better one. ContainerDays 2016

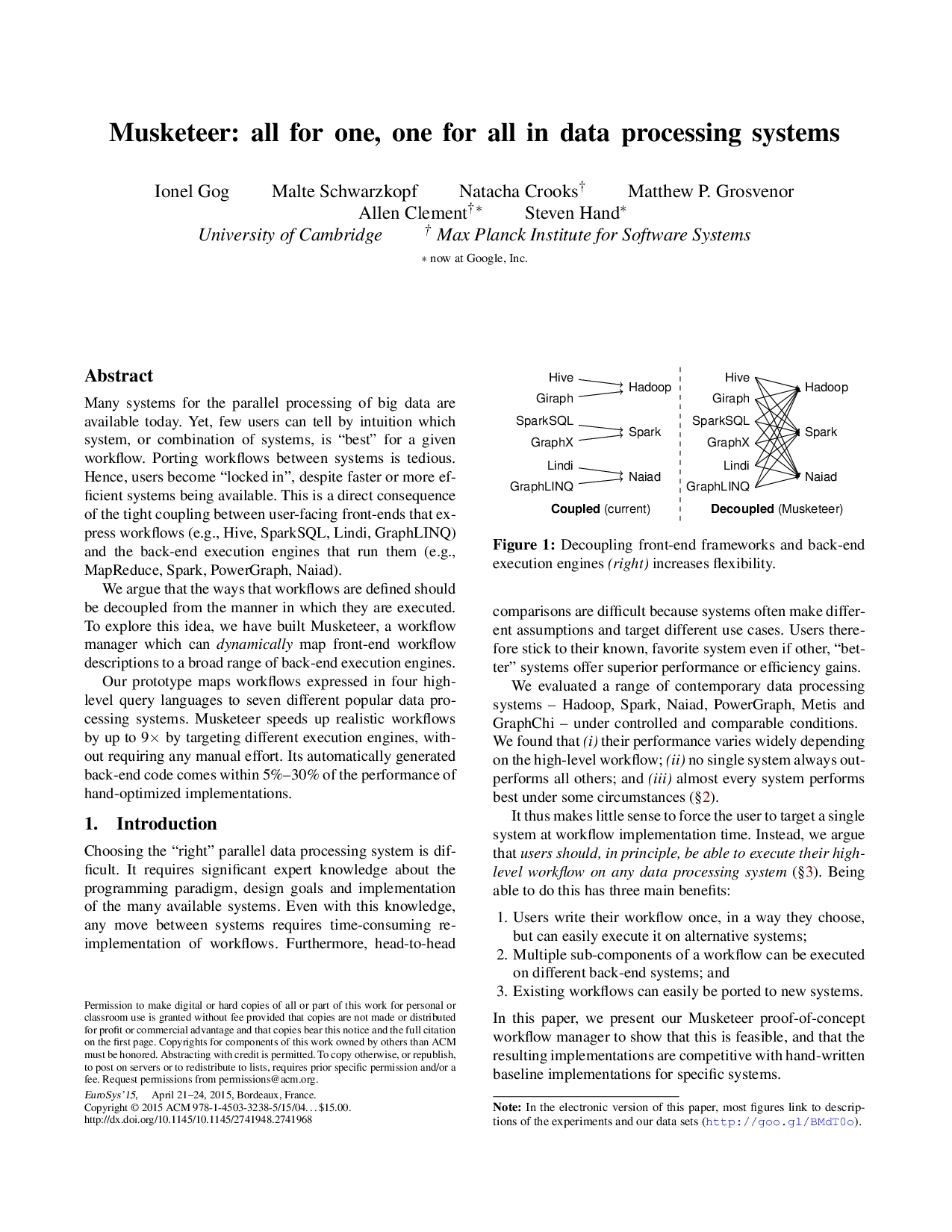

Musketeer: all for one, one for all in data processing systems. EuroSys 2015

Broom: sweeping out Garbage Collection from Big Data Systems. HotOS 2015

High-quality, flexible and scalable scheduling with Firmament. ContainerSched 2015

PC member: USENIX ATC 2021, ERC for ASPLOS 2021, ACM SoCC 2020, ACM EdgeSys 2020, ACM EdgeSys 2019.